OpenAI’s Product Leader Shares a 10-Step Guide to Finding PMF for AI Products

From narrowing your ICP to building moats early, this definitive 10-step framework shows how to move from flashy AI demos to trusted, defensible products that customers can’t live without.

Welcome to NEW ECONOMIES, where we decode the technology trends reshaping industries and creating new markets. Whether you're a founder building the future, an operator scaling innovation, or an investor spotting opportunities, we deliver the insights that matter before they become obvious. Subscribe to stay ahead of what's next alongside 75,000+ others.

Hey Readers 👋

This week, we are excited to welcome guest writer Miqdad Jaffer, who works on OpenAI’s product team. Throughout this edition, Miqdad shares his insights on how AI startups can achieve product-market fit.

In this edition, you will learn 👇

PMF in AI is continuous, not a one-time win.

Hype and capital don’t equal fit.

Define jobs-to-be-done, not “AI for X.”

Target high-pain, high-frequency, high-AI-advantage jobs.

Solve real bottlenecks, not demo features.

Start with an uncomfortably narrow ICP.

Focus on edge cases to build trust.

Add a trust layer before scaling.

Track adoption depth, not vanity metrics, and price on value.

Build moats early with data, distribution, and feedback loops.

Let’s dive in 🚀

A quick word from this week’s sponsor: Every

Every has worked with over 800 startups—from first-time founders at pre-seed to fast-moving teams raising Series A and beyond—and they would love to help you navigate whatever’s next.

Here’s how they’re willing to help you:

Incorporating a new startup? They’ll take care of it—no legal fees, no delays.

Spending at scale? You’ll earn 3% cash back on every dollar spent with their cards.

Transferring $250K+? They’ll add $2,000 directly to your account.

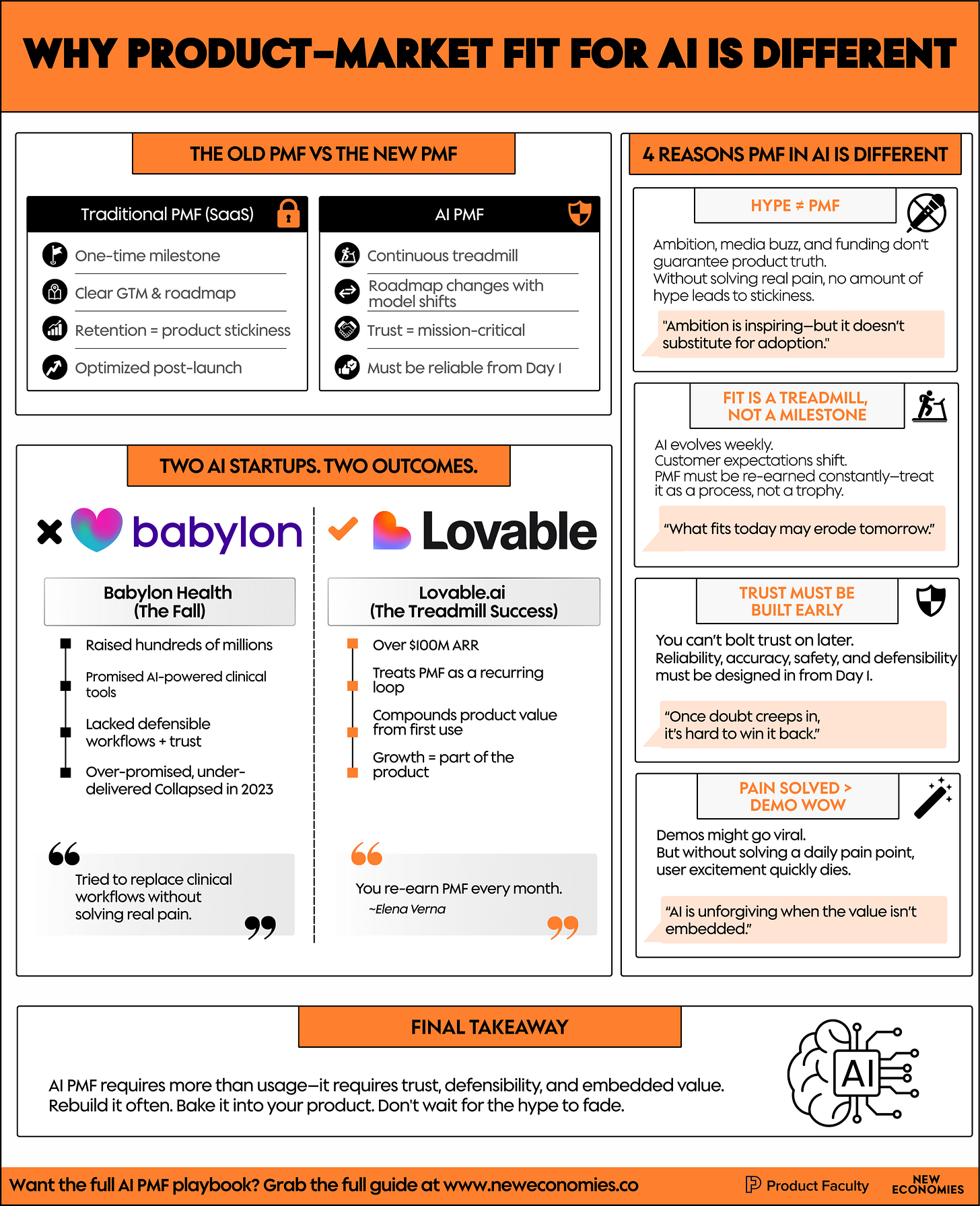

Why PMF for AI Is Different

Every founder, no matter what industry they come from, has heard the well-worn advice repeated on every stage and in every startup book: “Find Product-Market Fit, and everything else will follow.”

The phrase has become so ingrained in startup culture that it is often thrown around without much thought, as if PMF is a universal recipe you can apply in the same way whether you are building a SaaS tool for HR managers, a marketplace for second-hand goods, or an AI-powered copilot for doctors.

But what most people don’t realize and what far too many founders learn the hard way… is that the traditional ways of finding PMF, the methods drawn from the last two decades of SaaS and consumer playbooks, do not map cleanly onto the unique dynamics of AI products.

To see that in action, let’s look at Babylon Health, once one of the UK’s most celebrated AI-enabled startups. At its peak, Babylon was valued at over $2 billion and was seen as the future of healthcare delivery… promising AI symptom checkers, remote patient monitoring, and even national health contracts. It raised hundreds of millions in funding, went public via a SPAC, and was hailed as proof that AI could transform entire industries. But in 2023, it all unraveled. Its U.S. operations filed for bankruptcy, its U.K. arm collapsed into administration, and what was once a unicorn was sold for scraps.

What went wrong? The failure was not simply a matter of insufficient capital. Babylon lacked product-market fit grounded in real defensibility, lacked reliable trust in high-stakes outputs, over-promised and under-delivered, and, most critically, tried to apply AI as though it could replace entire clinical workflows without deeply solving the actual pain points.

Now contrast that with a more recent insight from Elena Verna, Growth Officer at Lovable, an AI company already past $100M ARR. In a widely shared LinkedIn post, she explained that in AI, PMF isn’t a milestone you reach once and lock in; it’s a treadmill. You re-earn it every month because customer expectations evolve, foundation models change, and competitors launch lookalikes overnight. In her words, growth in AI doesn’t sit in a separate “go-to-market” bucket; the product itself must deliver repeatable, compounding value from the very first use, and the growth team’s job is inseparable from the product team’s job.

These two stories… Babylon’s collapse, and Lovable’s evolving growth approach… contain the cautionary and instructive contrast that sets up why PMF in AI is different:

Ambition and hype are not PMF. You can raise massive funding, hire world-class teams, and capture headlines… but none of that proves your product solves a critical problem in a way users, customers, and stakeholders trust. Ambition is inspiring, but it doesn’t substitute for adoption that embeds into real workflows.

Fit is dynamic, not static. In AI, PMF is not a one-time milestone. Models evolve, regulatory environments shift, and user expectations rise as soon as they experience what’s possible. What feels like fit today may erode tomorrow if you treat it as a finish line instead of an ongoing process.

Trust and defensibility are front-loaded costs. In AI, you can’t wait until later to build reliability, safety, and unique moats. Accuracy, transparency, data capture, and distribution wedges must be designed into the product from the start, because once doubt creeps in or competitors copy your features, it’s nearly impossible to recover.

Pain solved > demo wow. AI is unforgiving when products live only in flashy demos. If you’re not eliminating real bottlenecks, delivering reliable outcomes, and embedding into daily workflows, excitement will fade and adoption will collapse.

That’s why this guide exists. I want to give you a clear, actionable, deeply practical set of steps so that if you’re building an AI product, you know exactly how to go from a shiny model wrapper to a defensible company with true product-market fit.

Side note: Miqdad Jaffer, Product Leader at OpenAI, is also running the world’s #1 AI PM Certification, a 6-week live program.

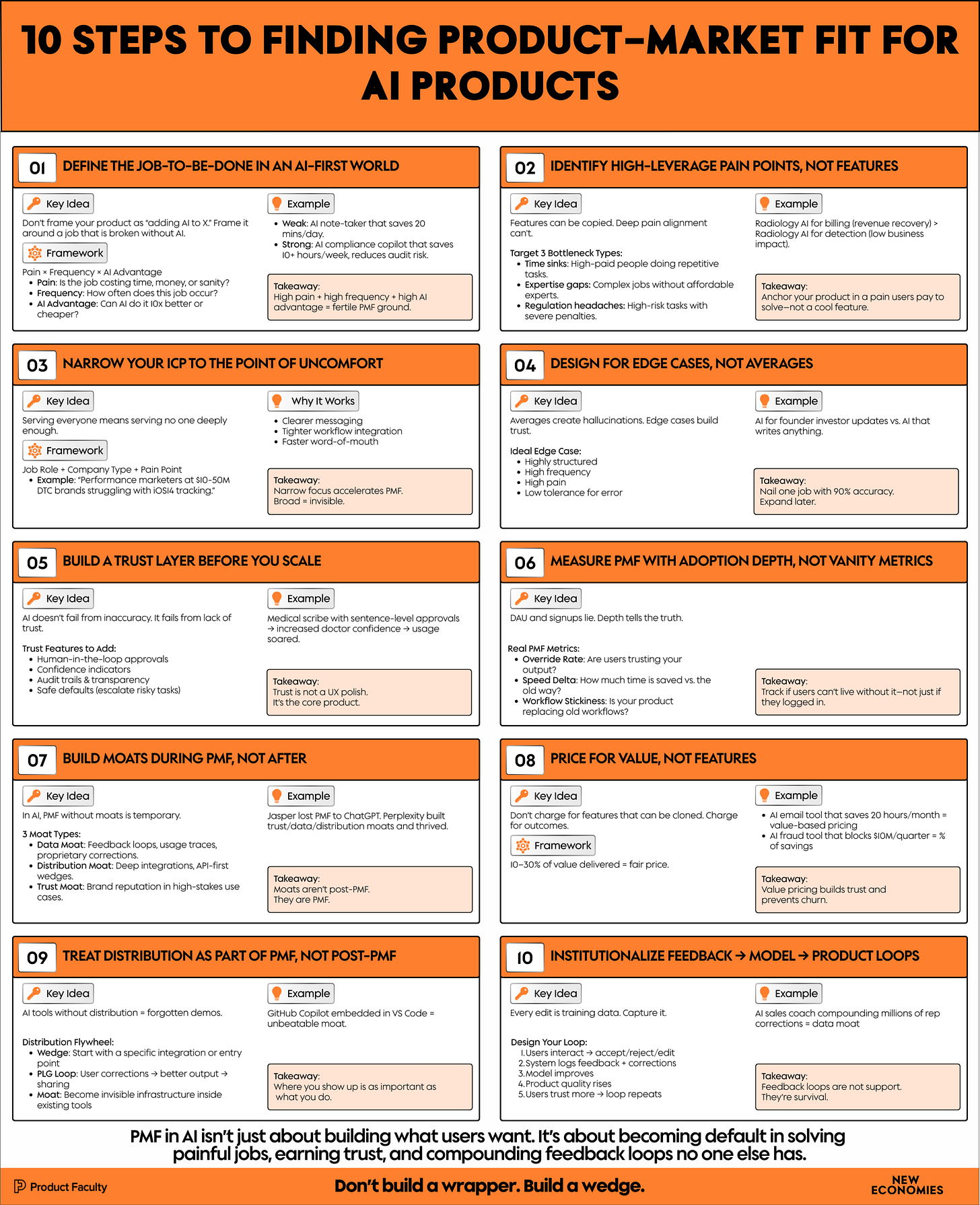

10 Steps to Finding PMF for Your AI Products

AI rewrites the rules of product-market fit. You can’t rely on hype, funding, or generic features when competitors can clone you overnight and users abandon tools that don’t solve real pain. What you need is a disciplined approach, one that focuses on sharp problems, trust, defensibility, and depth of adoption.

Here are the 10 steps this guide will walk you through:

Define the Job-to-be-Done in an AI-first World

Identify High-Leverage Pain Points, Not Features

Narrow Your Initial ICP to the Point of Discomfort

Design for Edge Cases, Not Averages

Build a Trust Layer Before You Scale

Measure PMF with Adoption Depth, Not Vanity Metrics

Build Moats During PMF, Not After

Price for Value, Not Features

Treat Distribution as Part of PMF, Not Post-PMF

Institutionalize Feedback → Model → Product Loops

Together, these steps form a playbook for going from a shiny AI demo to a defensible product that becomes a daily default in critical workflows.

Step 1. Define the Job-to-be-Done in an AI-first World

When most founders start building in AI, their instinct is to take something familiar… an existing product category, a workflow they already use, a popular SaaS tool… and simply add AI to it. “What if we added AI to note-taking?” “What if we added AI to CRMs?” “What if we added AI to customer service?” It sounds compelling, and it makes for slick demo videos, but here’s the truth: “add AI to X” is not a strategy.

Why not? Because what you’ve done in that framing is define your product around a technology, not around a job. You’ve anchored your vision on the presence of AI, not on the presence of pain. And if you’ve spent any time watching how users actually behave, you’ll know they don’t adopt technology for technology’s sake. They adopt it when it reduces pain so dramatically that not using it feels irrational.

The essence of finding PMF in AI starts with asking: What job is the user hiring this product to do? The job-to-be-done framework, long applied in traditional product management, takes on even greater importance in AI because the novelty of the technology can trick you into thinking you’re solving something valuable when in reality you’re just layering another tool onto a workflow people aren’t desperate to change.

Why “AI as a bolt-on” fails

Think of the dozens of “AI note-taking apps” launched in the past year. On the surface, they look impressive: connect your calendar, record meetings, get transcripts and summaries. But ask yourself: what job are they really doing for the user? Are they eliminating something that feels painful and urgent? Or are they just making an already tolerable task slightly more convenient?

For most knowledge workers, jotting notes or re-reading highlights is not the bottleneck that determines whether they hit their goals. The bottleneck is often elsewhere: aligning decisions, synthesizing complex inputs, producing compliance-ready documentation, or closing deals faster. So an AI product that frames itself as “AI for note-taking” ends up being a bolt-on convenience, not a must-have. Users try it, enjoy the novelty, and then revert to old habits.

Contrast this with a product designed around a native AI job… a job that is painful today, expensive today, and one where AI doesn’t just speed things up but collapses an entire process.

Finding where AI is native

The right question isn’t, “How can I add AI to X?”

The right question is: “What jobs exist in this industry that are structurally broken without AI?”

AI is native in problems where:

Information overload makes human effort costly (e.g., compliance officers reviewing thousands of transactions).

Pattern recognition across massive datasets outpaces human ability (e.g., fraud detection in payments).

Language or generation tasks are unavoidable bottlenecks (e.g., drafting regulatory filings, summarizing patient histories).

Decision support under uncertainty is frequent (e.g., prioritizing leads, predicting equipment failure).

When you find one of these jobs, AI doesn’t feel like an add-on; it feels like the only rational solution.

Example: Compliance documentation vs. note-taking

Consider two product ideas:

AI Notes App: You join meetings, the app transcribes and summarizes. The benefit? Saves you maybe 20 minutes per day, assuming you trust the output. The cost of not using it? You just keep writing notes yourself, a mildly annoying but manageable process.

AI Compliance Copilot: You work in financial services. Every week, you spend 3–5 hours preparing compliance documentation for audits, manually checking transactions, copy-pasting clauses, and formatting reports. An AI system reduces this to 5 minutes of review, ensuring accuracy and audit-readiness. The benefit? You get back nearly all of those 3–5 hours each week, reduce human error, and de-risk million-dollar penalties. The cost of not using it? You burn hours of high-paid employee time and expose yourself to legal risk.

One is a convenience. The other is survival. Which one do you think will achieve PMF faster?

This is the difference between AI as a bolt-on versus AI as a native solution. And it’s why your first step in building an AI product is not brainstorming features but identifying jobs where AI is not just helpful but transformative.

A simple framework: Pain × Frequency × AI Advantage

To systematize this search, I use a framework that evaluates jobs along three axes:

Pain: How costly is the job today if left unsolved? Does it waste time, money, or reputation? Would the user pay to have it eliminated?

Frequency: How often does this job occur? A rare pain, even if severe, might not support a company. The sweet spot is recurring pain that compounds over weeks and months.

AI Advantage: Can AI provide a step-change improvement, not just a marginal one? Does it reduce hours to minutes, increase accuracy by an order of magnitude, or make something possible that was previously impossible?

You can even think of it as a scoring exercise:

A high-pain, high-frequency job with little AI advantage is just a SaaS opportunity.

A high-AI-advantage, low-pain job is just a demo.

But a high-pain, high-frequency, high-AI-advantage job? That’s where PMF for AI products is born.

Take healthcare billing codes. Pain is high (errors cost billions), frequency is high (every patient visit), and AI advantage is real (models can process structured + unstructured notes faster than humans). That’s fertile ground.

Now compare that to email subject line generation. Pain is low (copywriters already manage), frequency is medium, and AI advantage is marginal (outputs are hit-or-miss). That’s demo territory, not PMF.
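The scoring exercise above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 1-to-5 scale, the cutoff of 4 for "high," the label names, and the individual scores given to the two example jobs are all hypothetical choices, not figures from the framework itself. It also reads any job without high pain as demo territory, which is one interpretation of the examples above.

```python
from dataclasses import dataclass

# Assumption: each axis is scored from 1 (low) to 5 (high),
# and a score of 4 or more counts as "high".
HIGH = 4


@dataclass(frozen=True)
class Job:
    name: str
    pain: int
    frequency: int
    ai_advantage: int


def score(job: Job) -> int:
    """Multiply the three axes so one weak axis drags the whole score down."""
    return job.pain * job.frequency * job.ai_advantage


def classify(job: Job) -> str:
    """Map a job onto the rough buckets described in the text."""
    high_pain = job.pain >= HIGH
    high_frequency = job.frequency >= HIGH
    high_ai = job.ai_advantage >= HIGH

    if not high_pain:
        # Without real pain, even impressive output stays a demo.
        return "demo territory"
    if not high_frequency:
        # Severe but rare pain may not support a company.
        return "too infrequent"
    if not high_ai:
        # Painful and frequent, but AI adds little: ordinary software can win.
        return "SaaS opportunity"
    return "PMF candidate"


# Illustrative scores only; real scoring would come from customer research.
billing = Job("healthcare billing codes", pain=5, frequency=5, ai_advantage=4)
subject_lines = Job("email subject lines", pain=2, frequency=3, ai_advantage=2)

for job in (billing, subject_lines):
    print(f"{job.name}: score={score(job)}, verdict={classify(job)}")
```

Multiplying rather than adding the axes is a deliberate choice in this sketch: a job that scores near zero on any one axis should not be rescued by the other two.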

The subtlety: Don’t chase TAM, chase JTBD depth

One last nuance: AI founders often make the mistake of saying, “This job exists for everyone, so the TAM is huge.” But in AI, TAM is a trap. You don’t win by being horizontal from day one. You win by going deep into a job, mastering it, and embedding yourself so tightly that expansion becomes obvious later.

The goal of Step 1 is not to map the entire universe of AI opportunities. It’s to identify the one job that is so painful, so frequent, and so solvable with AI that your product has no choice but to become indispensable. Everything else — features, models, moats — flows from this foundation.

Step 2. Identify High-Leverage Pain Points, Not Features

When founders pitch AI products, I often hear them lead with features. “We built the fastest summarizer.” “Our model has 3% better accuracy.” “We integrated with Slack, so you can get results instantly.” On the surface, these sound compelling: they showcase capability and speed. But the problem is that features can be copied overnight. Pain points cannot.

Anyone can hook into OpenAI’s API and recreate your summarizer. Anyone can tack on a Slack integration. Even model accuracy improvements rarely create lasting differentiation, because competitors can either fine-tune on similar data or wait until the next base model release levels the playing field again.

What cannot be cloned so easily, however, is your deep alignment with a customer’s most painful, expensive, and high-leverage problems. That alignment is what unlocks adoption, retention, and eventually defensibility.

The trap of feature-first thinking

AI is seductive because features demo so well. Show a slick transcription, a perfect image generation, or a clean code suggestion, and people get impressed. But what happens after the demo?

Users ask:

“Does this solve my actual problem?”

“Does this remove the bottleneck I’ve been struggling with every week?”

“Does it save me money, reduce risk, or free up my time?”

If the answer is no, the feature doesn’t matter.

This is why many AI startups get stuck in “demo land.” They build clever outputs, win applause on social media, maybe even drive a wave of signups, but churn follows because the features don’t anchor to a painful enough problem. The output is interesting, not indispensable.

Bottlenecks: where pain compounds

So where should you look? Instead of chasing features, look for bottlenecks, those points in workflows where progress grinds to a halt, where time sinks are the norm, where expertise is scarce, or where mistakes carry outsized consequences.

Here are three categories of bottlenecks that consistently create high-leverage opportunities for AI:

Time sinks → Processes that consume hours of high-value talent. Example: Analysts spending days consolidating data into quarterly reports. AI can collapse this into minutes.

Expertise gaps → Tasks that require rare, expensive skills. Example: Contract review in legal teams. AI can democratize expertise by giving mid-level employees “senior-level” guidance.

Regulation headaches → Jobs where errors carry massive penalties. Example: Healthcare coding or financial compliance. AI can reduce human error and de-risk millions in fines.

When you find a bottleneck that sits at the intersection of these categories, you’ve found a high-leverage pain point that people will pay to eliminate.

Case study: Radiology AI

Let’s take radiology as an example. Many AI startups in healthcare have built imaging models with the pitch: “Our model detects tumors 5% more accurately than the state of the art.” Impressive technically, but in practice, this pitch often fails to drive adoption.

Why? Because accuracy alone doesn’t move the needle for hospitals in the way you think. Doctors already combine machine support with human judgment, and liability concerns mean a small accuracy bump isn’t enough to overhaul workflows.

Now consider a different angle: billing. In the U.S., radiology departments often lose millions each year to billing errors: mis-coded scans, missed documentation, inconsistent records. This is not a minor annoyance; it’s a direct financial pain point tied to hospital revenue and compliance.

An AI product that ensures every radiology report is coded correctly, compliant with insurance requirements, and audit-ready delivers not just “better accuracy” but millions in recovered revenue and reduced risk.

Notice the shift: same underlying technology (language + image models), but instead of focusing on a feature (diagnosis accuracy), the company aligns itself with a pain point (billing losses). The former excites researchers. The latter convinces CFOs to sign contracts. And in enterprise AI, CFO buy-in often determines survival.

Why pain beats features every time

When you build around features, you’re always vulnerable: the next model release, the next well-funded competitor, or the next API update can erase your advantage. But when you build around pain points, you’re resilient.

A feature-first company says: “We summarize meetings better than others.”

A pain-first company says: “We help sales teams recover 20% more pipeline by eliminating manual follow-ups.”

The second statement ties directly to business value. It’s hard to copy because it requires deep integration with customer context, not just API access. And most importantly, it creates urgency. Features invite curiosity. Pain points drive budgets.

How to uncover high-leverage pain points

Finding these pain points requires going deeper than surface-level user interviews. Most customers will say they want “better features” because that’s what’s visible. But your job as a founder is to dig past symptoms to root causes.

Ask:

What tasks do your users consistently procrastinate on? (signal: painful time sinks)

What errors have cost them real money or reputation? (signal: regulation headaches)

What expertise do they wish they had on staff but can’t afford? (signal: expertise gaps)

What’s the worst-case scenario if this job is done poorly? (signal: high leverage)

A useful exercise is to map your target customer’s workflow step by step and highlight the moments of friction, delay, or risk. Often, the highest-leverage opportunities are not where users say they want help, but where the workflow bottlenecks naturally cluster.

Your job is not to showcase how fancy your model is. Your job is to uncover the one or two bottlenecks in your customer’s life that are so painful, so recurring, and so costly that solving them with AI makes your product an immediate necessity. Features get copied. Pain points don’t.

And once you anchor your product around a high-leverage pain point, every feature decision, every integration, and every pricing strategy becomes easier. You stop building “yet another AI tool,” and you start building a painkiller. And painkillers, unlike vitamins, find product-market fit much faster.

Step 3. Narrow Your Initial ICP to the Point of Discomfort

One of the most common mistakes I see AI founders make is starting far too broad.

They’ll say things like, “Our AI tool is for all marketers.” Or “Our product helps every salesperson.” Or worse, “Anyone who writes can use this.”

On the surface, broad markets sound attractive. After all, the Total Addressable Market (TAM) looks enormous. Investors nod when you say “billions of potential users.”

But the brutal truth is this: the broader your initial ICP, the weaker your adoption will be. When you serve everyone, you serve no one deeply enough to matter.

In AI, this mistake is magnified. Why? Because users have already been flooded with generic AI apps. They’ve seen dozens of AI tools that claim to help “marketers,” “students,” or “founders.” They no longer care about horizontal promises.

They care about whether your product solves their exact, painful problem better than anything else. And that requires you to define your ICP so narrowly that it almost feels uncomfortable.

Why narrowing feels counterintuitive

Most founders resist narrowing their ICP because it feels like limiting upside. If you say your tool is only for “performance marketers at $10–50M DTC brands,” you worry you’re cutting out 95% of the market. If you say your AI forecasting tool is only for “gaming PMs,” you fear investors will think it’s too niche.

But here’s the paradox: the narrower you go at the start, the faster you find PMF. Why? Because when you define your ICP precisely, three powerful things happen:

You speak their language. Instead of vague messaging like “AI for marketers”, you can say “AI that fixes post-iOS14 attribution leaks for DTC brands.” The latter hits like a lightning bolt because it mirrors the exact pain your ICP feels.

You embed deeply into workflows. When you build for everyone, you design shallow features that kind of work for many. When you build for a narrow ICP, you can tailor integrations, UI, and outputs exactly for their workflow, making the product indispensable.

You accelerate word-of-mouth. When you solve a niche group’s pain so well, they become evangelists. The DTC founder tells other founders at their mastermind. The gaming PM shares your tool in their Slack community. Early growth in AI doesn’t come from ads; it comes from tight communities spreading tools that actually work.

Example: Post-iOS14 marketers vs. “all marketers”

Let’s make this concrete. Imagine you’re building an AI analytics tool. You could pitch it as “AI that helps marketers optimize campaigns.”

Sounds fine, but it’s vague. Which marketers? Paid search? Brand marketers? Social media managers? Each has different workflows, pains, and budgets.

Now let’s narrow. You choose performance marketers at $10–50M DTC brands struggling with post-iOS14 tracking. Suddenly, everything sharpens:

Pain clarity: Post-iOS14, attribution has become unreliable. Performance marketers at this revenue band are losing millions to inefficient ad spend and have budgets to fix it.

Workflow clarity: These marketers live in Meta Ads Manager, Google Analytics, and Shopify. You can build integrations directly into their stack.

Message clarity: Your pitch becomes, “We help $10–50M DTC brands recover lost performance by fixing attribution leaks caused by iOS14.” That is ten times more compelling than “AI for marketers.”

By narrowing, you don’t shrink your chances of success. You increase them, because now your product has a chance to become a “must-have” for a well-defined group.

The discomfort test

Here’s a rule of thumb I share with founders: if your ICP definition doesn’t feel uncomfortably narrow, it’s not narrow enough.

Saying “marketers” is too broad.

Saying “performance marketers” is still too broad.

Saying “performance marketers at $10–50M DTC brands struggling with post-iOS14 tracking” is the level of specificity you need.

Why? Because when you pitch to that group, you’ll see heads nod immediately. You’re describing their exact life, not some abstract persona. And that resonance is the first signal you’re on the right track toward PMF.

ICP narrowing as an accelerant

In SaaS, you can sometimes start broad because horizontal products (like Slack or Dropbox) can ride network effects or virality. But in AI, where novelty fades fast and features are easy to clone, narrow ICP is your survival. It forces you to build a product so deeply valuable to a niche group that they can’t live without it. And from there, you earn the right to expand.

So don’t ask, “Who could use this?” Ask, “Who needs this so badly they’ll pay tomorrow?” The answer will almost always be a smaller group than you first imagined. That’s the group that will give you the traction, feedback, and revenue to survive. And ironically, that’s also the group that will one day allow you to grow big.

Step 4. Design for Edge Cases, Not Averages

In traditional SaaS, finding PMF usually means building for the averages. You identify the most common workflows, you optimize for the broad middle of user behavior, and you ensure the 80% of everyday use cases are covered.

Customers accept that edge cases will require workarounds, because SaaS is deterministic… if the button is there and the workflow is supported, it works every time.

AI flips this logic on its head. If you try to build for averages in AI, you will fail, because averages are exactly where models struggle. The broader the scope, the more room there is for hallucinations, unpredictable outputs, or disappointing mediocrity.

Instead, AI products find PMF fastest when they are designed for edge cases, those highly specific, constrained, and predictable jobs where models shine far brighter than humans.

Why averages don’t work in AI

Think of a general-purpose AI writing tool. You pitch it as: “Write anything — emails, essays, blog posts, sales copy, social media.” That sounds powerful, but it immediately creates two problems:

Output quality is inconsistent. Writing a technical blog post requires different tone, accuracy, and context than writing a birthday invitation. Averages stretch the model too thin. Users encounter errors, awkward phrasing, or hallucinated facts, and they lose trust.

Value is unclear. When you promise “write anything,” you’re competing against dozens of similar tools. Users don’t know why they should pick yours, because it doesn’t solve a specific pain point better than alternatives.

The result? Curiosity-driven signups, weak retention, and churn.

Edge cases as a wedge

Now contrast this with an AI product designed around a narrow edge case: drafting investor updates for founders. This job has unique qualities:

80% of the content is predictable: growth metrics, recent milestones, upcoming goals.

Founders dread writing it because it’s repetitive and time-consuming.

Accuracy matters, but the structure and context reduce the risk of hallucination.

The output doesn’t need to be perfect prose; it just needs to be a solid draft the founder can polish in minutes.

By focusing on this edge case, the product doesn’t try to be everything to everyone. Instead, it becomes magical for a small but important workflow. And once a founder uses it and realizes it saves them two hours per month while still keeping investors happy, the product quickly embeds into their routine.

This is the pattern: AI products win by excelling in narrow, structured jobs where human pain is high and model predictability is high.

Why this builds trust faster

One of the most dangerous pitfalls in AI PMF is what I call “hallucination shock.” This is the moment when a user, initially excited by your product, suddenly realizes they can’t trust it. Maybe the AI lawyer drafts a contract with a fake clause. Maybe the AI researcher invents citations. Maybe the AI doctor suggests a dangerous dosage. Even a single shocking error can destroy trust, and once trust is broken, it’s almost impossible to rebuild.

Designing for edge cases mitigates this risk. When you choose jobs where 80% of the context is predictable and the constraints are narrow, the model has less room to go off the rails. The outputs feel consistently reliable, which accelerates trust-building. Users start thinking: “I can count on this tool for this specific job.” That’s the first step toward making your AI product a default.

Edge cases compound into averages

Here’s the counterintuitive part: starting with edge cases doesn’t limit your growth; it accelerates it, because once you dominate one edge case, you earn the right to expand into adjacent ones.

From “AI for investor updates,” you can move into “AI for board reports.”

From “AI for LTV projections in gaming,” you can expand into “AI for churn modeling in SaaS.”

From “AI for compliance documentation in finance,” you can broaden into “AI for audit preparation across industries.”

The sequence matters. If you start broad, you drown in averages and lose trust. If you start narrow, you build depth, win advocates, and then expand outward.

Practical test: the edge case filter

Here’s a simple test: when you define your product’s job, ask yourself — “If I gave this AI tool to ten random users in the broad market, would the outputs delight all ten? Or would five get annoyed and lose trust?” If the latter, you’re chasing averages.

Now reframe: “If I gave this tool to ten users in a specific edge case, like compliance managers at fintech startups preparing SOC2 reports, would nine out of ten say this saves them hours and feels reliable?” If yes, you’ve found an edge case worth building around.
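If you want to run this test on real pilot users rather than in your head, the bookkeeping is trivial. The sketch below assumes you record one yes/no answer per user ("did this save you hours and feel reliable?"); the function name and the default bar of nine are hypothetical, taken from the nine-out-of-ten phrasing above.

```python
from typing import Sequence


def passes_edge_case_filter(reactions: Sequence[bool], min_delighted: int = 9) -> bool:
    """Return True if enough pilot users said the tool saved time and felt reliable.

    `reactions` holds one boolean per user in the panel (ten in the text's example).
    """
    if not reactions:
        raise ValueError("need at least one user reaction")
    return sum(reactions) >= min_delighted


# Ten random users from the broad market: half are annoyed.
broad_market = [True, False, True, False, True, False, True, False, True, False]

# Ten compliance managers preparing SOC2 reports: nine are delighted.
narrow_edge_case = [True] * 9 + [False]

print(passes_edge_case_filter(broad_market))
print(passes_edge_case_filter(narrow_edge_case))
```

The point is less the arithmetic than the discipline: write the bar down before you run the pilot, so a lukewarm result cannot be rationalized afterward.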

The essence of AI PMF is embedding into workflows. And embedding starts with trust. You don’t earn trust by trying to do everything; you earn it by doing one painful job so well that users can’t imagine working without you. That’s why in AI, you must design for edge cases, not averages.

Build where models shine. Build where hallucination risk is low. Build where the job is narrow but the pain is high. That’s how you create trust loops, wedge into workflows, and eventually scale from “AI demo” to “AI default.”

Step 5. Build a Trust Layer Before You Scale

In SaaS, scaling is often about adding features, tightening onboarding, and pumping money into distribution. If your tool helps sales teams save time or makes HR workflows easier, customers will forgive imperfections along the way, as long as you’re responsive and moving fast.

But in AI, the rules are different. You cannot scale without trust. And trust is not a marketing slogan; it is a product layer.

Why trust is the real product

Here’s the uncomfortable truth: AI adoption rarely fails because of accuracy. It fails because of trust gaps. Your model could be 95% accurate, but if the 5% of failures are catastrophic — hallucinated citations, fabricated financial figures, incorrect medical terms — users will abandon you instantly. In AI, perceived reliability matters more than technical accuracy.

Think about it: when you drive a car, you don’t need it to be flawless in design or efficiency. What matters is that every time you hit the brakes, it stops. If the brakes failed even 5% of the time, you’d never get behind the wheel. That’s the mental model users apply to AI: they’re willing to accept imperfection as long as they can trust the system to fail safely and transparently.

That’s why every successful AI product I’ve worked with has invested early in what I call a trust layer: the scaffolding around the core model that gives users confidence in its outputs, even when the model itself is fallible.

What a trust layer looks like

A trust layer isn’t just one feature. It’s a combination of design, transparency, and workflow choices that shift the product from “magical demo” to “dependable tool.”

Here are some common components:

Human-in-the-loop controls → Users get the ability to review, accept, or reject AI outputs before they become final.

Example: A medical scribe that highlights each sentence for doctor approval instead of auto-submitting notes.

Confidence indicators → Show uncertainty or highlight risky sections.

Example: An AI legal reviewer that flags clauses it is “less certain about,” prompting human attention.

Explainability hooks → Let users drill into why an answer was given.

Example: An AI fraud detector that provides “reason codes” tied to transaction anomalies.

Audit trails → Log every suggestion, correction, and override for accountability.

Example: An AI compliance tool that timestamps every user edit to prove regulatory diligence.

Safe defaults → When uncertain, the system defaults to non-destructive outputs.

Example: An AI customer support agent that escalates tricky cases to humans instead of improvising.

Each of these adds friction in the short term… but that friction creates confidence, and confidence accelerates long-term adoption.
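To make a few of these components concrete, here is a minimal Python sketch that combines a confidence indicator, a safe default, and an audit trail. It is illustrative only: the `REVIEW_THRESHOLD` value, the `Draft` and `AuditLog` names, and the assumption that the model layer supplies a confidence score between 0 and 1 are all hypothetical, not taken from any specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical cutoff: drafts below this confidence are flagged for human review.
REVIEW_THRESHOLD = 0.85


@dataclass
class Draft:
    text: str
    confidence: float  # assumed: a score in [0, 1] supplied by the model layer


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, action: str, draft: Draft) -> None:
        # Timestamp every routing decision so it can be reviewed later.
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "confidence": draft.confidence,
            "text": draft.text,
        })


def route(draft: Draft, log: AuditLog) -> str:
    """Return 'suggested' or 'needs_review'; nothing is auto-finalized here."""
    # Safe default: when the model is uncertain, hand the draft to a human.
    action = "suggested" if draft.confidence >= REVIEW_THRESHOLD else "needs_review"
    log.record(action, draft)
    return action


log = AuditLog()
print(route(Draft("A sentence the model is confident about.", 0.97), log))
print(route(Draft("A sentence the model is unsure about.", 0.41), log))
print(len(log.entries), "decisions logged")
```

Even the high-confidence path only produces a suggestion. In this sketch, final approval stays with the user, which is the human-in-the-loop control described above.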

Case example: The medical scribe company

One company in the healthcare space learned this the hard way. Their first product automatically generated patient notes from doctor-patient conversations. It was fast and technically impressive. But adoption stalled. Doctors didn’t trust the system, because even if 95% of the notes were correct, the 5% errors could lead to malpractice.

The company pivoted to include a trust layer: instead of auto-submitting, the tool displayed each generated sentence with a simple “approve” or “edit” option. What happened? Initially, this slowed down usage slightly. But within weeks, doctors reported higher confidence, and as they saw fewer errors over time, they began approving most sentences automatically. Within months, the same doctors were saying, “I can’t imagine writing notes manually anymore.”

The paradox is that by adding friction, the company built trust. And by building trust, they unlocked scale.

Why scaling without trust fails

I’ve seen dozens of AI startups chase scale too early. They raise funding, pour money into ads or outbound sales, and get initial signups. But without a trust layer, usage stalls. Early adopters encounter hallucinations, lose confidence, and churn. Word-of-mouth turns negative. Scaling amplifies distrust instead of adoption.

The lesson: if you scale before building trust, you’re just scaling churn. And once a trust gap spreads in your market, rebuilding reputation is nearly impossible.

How to measure trust in AI products

Unlike SaaS, where you can look at DAUs or retention curves, trust needs its own metrics. Some signals I recommend tracking include:

Override rate → How often users edit or reject outputs instead of accepting them unchanged. Lower override = higher trust.

Correction velocity → How quickly users edit errors. Faster edits suggest users are still engaged despite flaws.

Escalation ratio → How often users escalate AI tasks to humans. Declining ratios = rising trust.

Workflow replacement → Are users replacing old workflows with your AI product, or using it as a novelty sidekick?

Trust is not binary; it’s a curve you can measure, improve, and reinforce.
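As a rough illustration, two of these signals can be computed from a simple log of user outcomes. The sketch below assumes a hypothetical event format in which each AI output ends in exactly one of four outcomes (`accepted`, `edited`, `rejected`, `escalated`). It treats an override as any output the user edited or rejected, which is the reading under which a lower override rate means higher trust.

```python
from collections import Counter


def trust_metrics(outcomes):
    """Compute override rate and escalation ratio from a list of outcome strings.

    Each outcome is assumed to be one of:
    'accepted', 'edited', 'rejected', 'escalated'.
    """
    counts = Counter(outcomes)
    total = sum(counts.values())
    if total == 0:
        return {"override_rate": 0.0, "escalation_ratio": 0.0}
    # An override is any output the user changed or threw away.
    overridden = counts["edited"] + counts["rejected"]
    return {
        "override_rate": overridden / total,
        "escalation_ratio": counts["escalated"] / total,
    }


# Hypothetical week of activity: 10 outputs in total.
week = ["accepted"] * 6 + ["edited"] * 2 + ["rejected"] + ["escalated"]
print(trust_metrics(week))
```

Correction velocity and workflow replacement are left out here because they need timestamps and data about the old workflow, which this toy log does not carry.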

The compounding nature of trust

Here’s the beauty of trust in AI: once established, it compounds. Every user correction improves the model, every improvement strengthens reliability, every reliable moment deepens habit. Over time, trust shifts from “I review everything the AI produces” to “I trust it for 80% of the job” to “I can’t imagine working without it.”

And once your product reaches that threshold, competitors can’t just swoop in with a shinier demo. Trust is sticky. Trust is a moat.

Your temptation might be to chase more users, more demos, more features. Resist it. Instead, focus on building the trust layer that makes your early users confident advocates. Without it, you’ll forever be a demo. With it, you can scale into a default.

In AI, trust isn’t a side benefit; it’s the core product. Build it before you scale.

Step 6. Measure PMF with Adoption Depth, Not Vanity Metrics

In SaaS, the playbook for measuring product-market fit is well established. You look at DAU/WAU ratios, you measure retention curves, you send Net Promoter Score (NPS) surveys, and you see how many users would be “very disappointed” if your product disappeared. These metrics are useful for deterministic software, where features either work or they don’t.

But in AI, these same metrics can be dangerously misleading, because AI products don’t live or die by activity or sentiment. They live or die by depth of trust and workflow adoption.

If you rely on vanity metrics in AI, you’ll fool yourself into thinking you have PMF long before you do.

The vanity metric trap

Let’s start with the numbers most founders obsess over:

Daily Active Users (DAU): Someone logs in daily. But why? Are they actually using your product for their core workflow, or are they just poking around out of curiosity? Many AI tools see a surge of DAU after launch, only to plummet when the novelty wears off.

Retention curves: Someone comes back every week. But what are they doing? A few casual interactions don’t mean your product has become indispensable.

NPS surveys: A user says they “love” the product. But does “love” translate into trust and reliance, or just admiration for a cool demo?

I’ve seen AI companies raise millions on the back of vanity metrics, only to collapse months later because those numbers masked the lack of true workflow embedding.

Why AI needs different signals

AI products are probabilistic. Outputs vary. Trust builds slowly. Adoption happens in layers, not in a single leap. That means you need metrics that capture not just if people are using your product, but how they are using it, and how deeply it has replaced their old way of working.

Three core depth metrics for AI PMF

Override Rate

Definition: The percentage of AI outputs that users edit or reject rather than accept as-is.

Why it matters: A high override rate means users don’t trust the system yet; they’re correcting too much. A declining override rate over time means users are trusting more and embedding your tool deeper.

Example: An AI legal drafting tool may start with 70% of outputs heavily edited. As the model improves and trust builds, that drops to 30%. That decline is far more meaningful than raw DAU growth.

Speed Delta

Definition: The time saved compared to the old workflow.

Why it matters: If your product saves users 5 minutes, that’s a convenience. If it saves them 5 hours, that’s a workflow revolution. Speed delta quantifies your value beyond novelty.

Example: An AI compliance tool reduces quarterly reporting from 3 weeks of work to 2 days. That speed delta signals transformative PMF.

Workflow Stickiness

Definition: The extent to which your product has become the default way of completing the job.

Why it matters: Users may try AI once and then revert to old tools. Stickiness measures whether they’ve abandoned the old way entirely.

Example: A customer support team using AI agents for 80% of tickets has achieved stickiness; if they’re only using it for 10% of tickets, you’re still in demo land.

These metrics get at the heart of AI PMF: are you replacing painful workflows, saving significant time, and earning trust at scale?
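Speed delta and workflow stickiness are simple ratios, so a small worked example may help. The Python sketch below reuses the figures from the examples above (3 weeks down to 2 days, and 80% of tickets). The conversion to hours assumes 5-day weeks and 8-hour days, and the count of 100 tickets is a made-up total; both are my assumptions, not figures from the examples.

```python
def speed_delta(old_hours: float, new_hours: float) -> dict:
    """Time saved versus the old workflow, in hours and as a fraction."""
    if old_hours <= 0:
        raise ValueError("old_hours must be positive")
    saved = old_hours - new_hours
    return {"hours_saved": saved, "fraction_saved": saved / old_hours}


def workflow_stickiness(jobs_in_product: int, total_jobs: int) -> float:
    """Share of all jobs of this type that were completed in the product."""
    if total_jobs <= 0:
        raise ValueError("total_jobs must be positive")
    return jobs_in_product / total_jobs


HOURS_PER_DAY = 8   # assumption
DAYS_PER_WEEK = 5   # assumption

# Compliance example: quarterly reporting drops from 3 weeks to 2 days.
old = 3 * DAYS_PER_WEEK * HOURS_PER_DAY
new = 2 * HOURS_PER_DAY
delta = speed_delta(old, new)
print(f"saved {delta['hours_saved']} hours ({delta['fraction_saved']:.0%} of the old workflow)")

# Support example: 80 of a hypothetical 100 tickets handled by the AI agent.
print(f"stickiness: {workflow_stickiness(80, 100):.0%}")
```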

Case example: The AI legal startup

One AI legal startup had an early growth surge. Thousands of lawyers signed up. Retention looked healthy. NPS scores were high. But when we dug deeper, we saw override rates near 90%. Lawyers were rewriting almost every clause the system generated. The product wasn’t actually reducing workload; it was just creating more editing.

This company didn’t have PMF. They had curiosity-driven usage. It wasn’t until they narrowed to a specific edge case (compliance contracts) and got override rates below 30% that adoption truly stuck. Within months, law firms began canceling competing software, because now the AI tool was saving real hours and reducing error risk. That was the real PMF signal, hidden beneath vanity metrics.

Building an AI PMF dashboard

Here’s a simple way to measure adoption depth: build a dashboard that tracks:

Override rate (is trust building?)

Time-to-complete (is speed increasing?)

% of jobs completed fully in your product vs. old workflow (is stickiness rising?)

Repeat job usage (are users bringing recurring jobs back to your tool, not just trying it once?)

If these numbers improve month over month, you’re on the path to PMF — even if DAUs aren’t skyrocketing. If they stagnate, you’re stuck in novelty territory.
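One way to check "improving month over month" is to compare consecutive monthly snapshots and ask whether each metric keeps moving in the right direction. The following is a sketch under stated assumptions: the metric names, the snapshot format, and the sample numbers are all hypothetical.

```python
# Direction each metric should move if adoption is deepening.
DESIRED_DIRECTION = {
    "override_rate": "down",
    "minutes_to_complete": "down",
    "pct_jobs_in_product": "up",
    "repeat_job_rate": "up",
}


def depth_trends(history):
    """history: list of monthly snapshot dicts, oldest first.

    Returns metric -> True if every month-over-month step moved in the
    desired direction, else False.
    """
    if len(history) < 2:
        raise ValueError("need at least two monthly snapshots")
    trends = {}
    for metric, direction in DESIRED_DIRECTION.items():
        series = [month[metric] for month in history]
        steps = list(zip(series, series[1:]))
        if direction == "down":
            trends[metric] = all(later < earlier for earlier, later in steps)
        else:
            trends[metric] = all(later > earlier for earlier, later in steps)
    return trends


# Made-up data for three consecutive months.
history = [
    {"override_rate": 0.70, "minutes_to_complete": 42, "pct_jobs_in_product": 0.15, "repeat_job_rate": 0.20},
    {"override_rate": 0.55, "minutes_to_complete": 35, "pct_jobs_in_product": 0.30, "repeat_job_rate": 0.25},
    {"override_rate": 0.40, "minutes_to_complete": 36, "pct_jobs_in_product": 0.45, "repeat_job_rate": 0.33},
]
for metric, ok in depth_trends(history).items():
    print(f"{metric}: {'improving' if ok else 'stalled'}")
```

In this sample, time-to-complete ticks up in the third month, so it is reported as stalled even though the other three metrics improve. A real dashboard would probably tolerate small fluctuations rather than require strict improvement every month.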

Why adoption depth matters more than growth

Growth can be manufactured. You can buy ads, do outbound sales, or launch on Product Hunt and see thousands of signups. But depth cannot be faked. Either your product becomes the default way a user solves their painful job, or it doesn’t.

Depth is also your best defense. Competitors can copy your features, but they cannot easily dislodge a product that has embedded itself deeply into a workflow. If your AI saves a compliance officer 10 hours per week and they trust it fully, no shiny demo will tempt them away.

At this stage of your journey, the question isn’t, “How many users do we have?” The question is, “How many users can’t live without us?”

Vanity metrics will give you false confidence. Depth metrics will tell you the truth. And in AI, the truth is that PMF is measured not by how many people try your product, but by how deeply it becomes the default for the painful jobs they care most about.

Adoption depth, not surface activity, is the signal. Track it, optimize for it, and let it guide you to real PMF.

Step 7. Build Moats During PMF, Not After

In most startup advice, moats are treated as something you worry about later. First, get traction. Then, once you’ve hit product-market fit, think about defensibility. That logic holds in SaaS, where building features takes time, competitors can’t instantly copy your code, and first-mover advantage buys you breathing room. But in AI, this advice is fatal. If you wait until after PMF to build moats, you may never get the chance.

Why? Because in AI, your “technology advantage” is vanishingly small. The models you’re using are not yours — they’re APIs anyone can access. Even if you train your own, improvements in foundation models will quickly erode your edge. Your competitors can clone your features in weeks, sometimes days. Which means that unless you build moats into your product during the PMF process itself, you risk being leapfrogged the moment you prove there’s money to be made.

The myth of “we’ll add moats later”

I can’t count how many AI founders I’ve heard say something like: “We’ll focus on getting users now, and once we hit PMF, we’ll start thinking about defensibility.” This mindset works in SaaS because the very act of building PMF creates defensibility: you accumulate switching costs, integrations, and data over time almost by default. But in AI, novelty attracts users fast — and competitors just as fast.

Imagine this: you launch an AI customer support tool. Early traction is strong. Churn is manageable. Investors get excited. But you haven’t built any unique distribution, you haven’t captured proprietary data, and your brand doesn’t carry trust in high-stakes contexts. Within six months, three better-funded competitors release nearly identical products. Suddenly, your “PMF” vanishes because customers see no reason to stay with you.

This is why AI companies die even after “finding traction”: they mistake early usage for defensible adoption. Without moats, PMF is temporary.

Three categories of AI moats

To avoid that fate, you must weave moats into your PMF journey. The strongest AI moats tend to fall into three categories: data, distribution, and trust.

Data Moat → proprietary feedback loops, unique datasets, or structured outcomes that competitors can’t access.

Example: An AI sales coaching tool that improves by analyzing millions of call transcripts corrected by actual reps. Each correction makes the product smarter in ways competitors can’t replicate without the same dataset.

Why it matters: APIs are open to everyone, but your dataset is not. Data becomes a compounding advantage if you start collecting it early.

Distribution Moat → embedding so deeply into workflows, integrations, or ecosystems that switching costs become prohibitive.

Example: An AI compliance product that integrates directly into Epic hospital systems or SAP financial systems. Even if a competitor matches your features, replacing you would require ripping out workflow-critical integrations.

Why it matters: The more your product becomes invisible infrastructure inside critical workflows, the harder it is to displace.

Trust Moat → reputation built in high-stakes environments where mistakes are costly.

Example: In healthcare or legal AI, once your brand is seen as the “safe” choice, competitors face years of uphill battle to convince customers they’re equally reliable.

Why it matters: Trust cannot be bought. It must be earned slowly, through consistent reliability. And once you have it, it’s one of the stickiest moats of all.

Case example: Jasper vs. Perplexity

Take Jasper, one of the earliest AI writing startups. They raised $125M at a $1.5B valuation, riding the wave of “AI for copywriting.” But their moat was thin. They had early traction, but no proprietary data loops, no unique distribution wedge, and limited trust differentiation. When OpenAI’s ChatGPT went mainstream, Jasper’s differentiation collapsed.

Now compare Perplexity. They weren’t first to market. But they built a distribution moat (mobile-first app with habit loops), a trust moat (clear citations for every answer), and a data moat (user feedback loops on search quality). As a result, even as dozens of competitors emerged, Perplexity carved out a defensible position that continues to grow.

The lesson is clear: traction without moats is fragile. PMF without defensibility is a mirage.

How to build moats during PMF

So how do you avoid the Jasper trap and build moats while you’re still searching for PMF? Here are a few strategies:

Instrument feedback from day one. Every correction, rejection, or approval should be captured. That data is the raw material for your moat.

Go where others can’t. Choose distribution wedges that competitors can’t easily copy. For example, embedding into industry-specific workflows or securing exclusive partnerships.

Make trust visible. Build transparency features (citations, audit logs, confidence scores) that not only reduce hallucination risk but also establish your brand as the “safe” choice.

Tie value to outcomes. If your product saves money or reduces risk, build contracts and case studies that showcase it. This strengthens trust and creates switching friction.

The compounding effect of early moats

The earlier you build moats, the faster they compound. A feedback loop started with 10 customers can evolve into a dataset of millions of interactions by the time you hit scale. A niche distribution wedge can expand into an enterprise-wide lock-in. A reputation for reliability in one critical edge case can expand into adjacent domains.

Moats are not something you bolt on after PMF. In AI, moats are part of PMF.

At this stage of your journey, your product may feel fragile. You’re chasing adoption, refining edge cases, and proving value. But even now, you should be asking: “What part of this product will still be defensible a year from now, when competitors clone our features?”

If you’re not building moats while finding PMF, you’re building for someone else’s future. They’ll copy your idea, leverage their distribution, and own the market you proved was valuable. But if you weave data, distribution, and trust moats into your PMF process, you’re not just finding fit, you’re building foundations competitors can’t easily shake.

In SaaS, moats protect you after you win. In AI, moats are how you win.

Step 8. Price for Value, Not Features

In SaaS, pricing often starts with features. You see the classic three-tier plans: Basic, Pro, Enterprise. Each tier unlocks more features — more seats, more storage, more integrations. Customers compare plans like a menu and decide based on what they need. This works because SaaS features are relatively sticky: it takes real engineering work to copy them, and over time, feature depth becomes a defensible moat.

In AI, this approach is a trap. Features commoditize too quickly. The summarizer you charge $20/month for today will be free inside Google Docs tomorrow. The chatbot you sell per seat will be cloned in Slack within weeks. If your pricing is anchored to features, you’ll always be in a race to the bottom.

Instead, AI products must price against value — the measurable, undeniable outcomes your product delivers. Not how many features you offer, not how many seats are on the account, but how much time, money, or risk you eliminate.

Why feature-based pricing fails in AI

Let’s take a simple example: an “AI email assistant” that drafts replies. You price it at $10/month per user. At first, customers pay. But then competitors offer the same at $5, or bundle it into Gmail for free. Suddenly, your pricing looks ridiculous. You’re left discounting just to survive.

The problem isn’t your product — it’s your pricing logic. You priced it as if the feature itself was the value. But in AI, features are never the value. The value lies in the outcomes the feature enables.

What value-based pricing looks like

Imagine instead that you position your AI email assistant not as “unlimited AI-drafted emails for $10/month” but as “we cut your average support rep’s response time in half, reducing staffing needs by 20%.” Suddenly, you can price based on savings, not features.

This is why the best AI companies don’t sell features — they sell outcome contracts.

An AI fraud detection tool doesn’t charge per seat. It charges as a percentage of dollars saved from blocked fraud.

An AI supply chain optimizer doesn’t charge by API call. It charges based on reduction in inventory waste or logistics costs.

An AI legal contract reviewer doesn’t charge per document. It charges relative to the billable hours replaced.

Pricing for value means your revenue scales with your customer’s savings, risk reduction, or speed gains. And unlike features, value cannot be commoditized away.

The heuristic: 10–30% of saved value

Over years of working with AI and enterprise clients, I’ve found a simple heuristic: AI products can usually capture 10–30% of the value they create.

If your AI tool saves a law firm $1M in annual billable hours, you can charge $100–300K.

If your AI tool prevents $10M in fraud losses, you can charge $1–3M.

If your AI tool saves a compliance team 2,000 hours a year, you can charge an amount equal to 200–600 of those hours in cost.

This anchors your pricing to impact, not to a feature menu. It also creates a natural fairness: customers are happy to pay because your price is directly proportional to the tangible value you deliver.
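The arithmetic behind the heuristic is simple enough to write down. This small Python sketch reproduces the three examples above; the function name and the use of whole-number percentages are my own choices for illustration.

```python
def value_price_band(value_created: float, low_pct: int = 10, high_pct: int = 30):
    """Return the (low, high) price band for a given amount of value created."""
    return value_created * low_pct / 100, value_created * high_pct / 100


# Law firm: $1M in annual billable hours saved.
print(value_price_band(1_000_000))    # (100000.0, 300000.0)

# Fraud tool: $10M in losses prevented.
print(value_price_band(10_000_000))   # (1000000.0, 3000000.0)

# Compliance team: 2,000 hours saved, so the band is expressed in hours of cost.
print(value_price_band(2_000))        # (200.0, 600.0)
```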

Case example: AI fraud detection vs. SaaS fraud dashboards

A traditional SaaS fraud dashboard might charge $50 per seat. But for enterprise clients, the number of seats is irrelevant, because the fraud problem doesn’t scale linearly with user count. Meanwhile, an AI fraud detection system that blocks $5M in attempted fraud per quarter can justify a value-based contract worth hundreds of thousands, even if the “features” look similar on the surface.

The fraud dashboard sells features. The AI system sells outcomes. Guess which one has better retention and defensibility?

Why customers respect value pricing

At first, founders worry that value-based pricing will scare customers away. In reality, the opposite is true. When you tie your price to value:

You signal confidence. Customers see that you’re willing to be measured against outcomes.

You reduce negotiation friction. Instead of haggling over seats or features, you align on measurable business impact.

You build trust. Customers know you win only when they win.

This alignment builds stronger relationships and higher willingness to pay.

How to implement value-based pricing

Quantify the baseline. Measure how much time, money, or risk the current workflow costs.

Run pilots. Show measurable improvements (hours saved, dollars protected, errors reduced).

Frame contracts as ROI. Anchor pricing as a fraction of captured value (10–30%).

Use hybrid models if needed. For early-stage, combine a base fee (to cover costs) with performance-based upside.

Example: An AI supply chain startup charges $50K/year base + 15% of verified logistics savings. This covers infrastructure but also scales with customer outcomes.
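To see how a hybrid contract like this behaves, here is a short Python sketch using the $50K base and 15% share from the example. The savings figures passed in are hypothetical, and flooring negative savings at zero is an assumption about how such a contract might be written.

```python
def hybrid_annual_fee(base_fee: float, verified_savings: float, share_pct: int = 15) -> float:
    """Base fee plus a percentage of verified savings (never below the base)."""
    # Assumption: if measured savings are negative, the customer still pays only the base.
    upside = max(verified_savings, 0) * share_pct / 100
    return base_fee + upside


BASE = 50_000
for savings in (0, 500_000, 2_000_000):
    fee = hybrid_annual_fee(BASE, savings)
    print(f"verified savings ${savings:,} -> annual fee ${fee:,.0f}")
```

The base fee sets the floor and the performance share sets the slope, so the fee only grows when the customer’s verified savings grow.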

The hidden moat of value pricing

Here’s the deeper insight: value-based pricing itself is a moat. When customers see that your product is tied to their business outcomes, it’s harder for competitors to undercut you with a cheaper per-seat plan. A competitor may offer the same feature at half the price, but if you’re delivering measurable ROI, your customer won’t switch. They’ll stay loyal, because outcomes are harder to fake than features.

At this stage of your journey, you may be tempted to slap a $20/month SaaS price tag on your AI product. Resist it.

Instead, ask: “What is the true value my product creates? How much time, money, or risk does it eliminate? What fraction of that value is fair for me to capture?”

If you price on features, you’ll always be under pressure. If you price on value, you’ll always be aligned with your customer. And alignment is what keeps you alive long after novelty fades.

In AI, features commoditize. Outcomes endure. Price for value, not for features.

Step 9. Treat Distribution as Part of PMF, Not Post-PMF

In traditional startup wisdom, distribution is often treated as a second act. First, you focus on finding product-market fit. Then, once you’ve proven that people want your product, you worry about growth, channels, and scaling. The underlying assumption is that if you’ve built something people love, distribution will come later, and growth is just a matter of pouring fuel on the fire.

That logic does not hold in AI. In AI, distribution is part of PMF itself. Why? Because AI tools live in a noisy, crowded market where dozens of competitors can launch similar features overnight. Your product doesn’t get judged only on its outputs, it gets judged on where it shows up, how seamlessly it fits into workflows, and how quickly it becomes a habit. Without smart distribution from the start, even a great product will be treated as a toy: tried once, admired for novelty, and abandoned.

Why distribution can’t wait in AI

There are three reasons AI demands distribution-first thinking:

The novelty tax: Users are curious about AI, so they’ll try anything once. But curiosity-driven usage is fragile. Unless your product embeds itself where the job actually happens, users won’t return. Distribution is what turns curiosity into habit.

The clone problem: Features are easy to copy. What competitors can’t easily copy is your distribution wedge — whether that’s a deep integration, a unique GTM motion, or a community-driven loop.

Workflow gravity: People don’t want another app. They want AI where they already work. If you force them into a new silo, they’ll try it briefly, then default back to their familiar stack. Distribution is what makes your product invisible infrastructure instead of a separate tool.

The distribution wedge → PLG loop → moat flywheel

Think about distribution not as an afterthought but as a three-stage system that runs in parallel with PMF:

GTM wedge: The first, narrow entry point where you land inside a customer’s workflow. This could be an integration, a Chrome extension, or a single API that solves one job.

Example: An AI compliance startup didn’t launch a standalone app. Instead, they built a plug-in inside Epic hospital software. That wedge gave them instant credibility and adoption.

PLG loop: Once you’ve landed, you need a loop that spreads usage naturally. In SaaS, this might be viral invites. In AI, it’s often user corrections, shared outputs, or network effects from embedded data.

Example: Perplexity’s mobile app makes every search shareable with citations. That loop spreads trust and drives organic installs.

Moat flywheel: Over time, your distribution itself becomes defensible. The more users embed you into workflows, the harder it is to rip you out. The more outputs get shared, the more data and trust you accumulate.

Example: GitHub Copilot became sticky not just because of good completions, but because it embedded itself inside VS Code — a distribution moat competitors couldn’t easily replicate.

Case example: Standalone app vs. embedded API

One AI startup tried to launch a standalone app for hospital documentation. The value was clear, but adoption was weak. Why? Because doctors didn’t want to leave their EHR (Electronic Health Record) systems to use a separate tool. The product was technically impressive, but distribution was misaligned.

They pivoted: instead of an app, they built an API that embedded directly inside Epic and Cerner (the dominant EHR systems). Overnight, adoption surged. Same underlying value, but the distribution wedge changed everything. Doctors no longer had to change behavior — the AI showed up where they already worked.

The lesson: in AI, distribution is not a growth channel — it’s part of the product experience.

Practical ways to build distribution into PMF

Embed, don’t replace. Find the tools your ICP already uses daily and integrate directly. AI that feels invisible will win over AI that demands new habits.

Leverage correction loops. Every time a user edits an AI output, that action can improve the model, generate better outputs, and attract more usage. Distribution and product improve in lockstep.

Design for shareability. Outputs that can be shared with colleagues, clients, or communities create natural word-of-mouth. Transparency (citations, audit logs) amplifies credibility.

Pick a wedge no one else is chasing. Instead of chasing broad “AI for everyone” launches, pick narrow channels. For example: “AI that plugs into Shopify Flow for mid-market DTC brands.”

Why scaling without distribution alignment fails

I’ve seen AI founders hit early traction, then pour money into paid acquisition. The result? Lots of signups, weak activation, high churn. Because even if users show up, the product isn’t where the job happens. No distribution wedge, no habit, no stickiness. Scaling only amplified the leaks.

The companies that survive flip the equation: they obsess about embedding into workflows and building natural loops first, then scale only once they know every new user is likely to stick.

At this stage of your AI journey, don’t treat distribution as “what comes later.” Treat it as part of your product-market fit search. Ask yourself: “Where does my product naturally live? What’s the wedge that gets me inside the workflow? What loops will spread usage once I land?”

If you answer those questions, your product won’t just be tested — it will be adopted, embedded, and defended. And in AI, where attention is fleeting and clones are everywhere, that’s the difference between being a demo and being a default.

Distribution isn’t the sequel to PMF. In AI, distribution is PMF.

Step 10. Institutionalize Feedback → Model → Product Loops

If there’s one truth that separates fleeting AI demos from durable AI products, it’s this: your product is only as strong as the feedback loops you institutionalize. In SaaS, product feedback is about features. Users ask for new buttons, better dashboards, or integrations. You prioritize the roadmap, ship updates, and customers see progress. In AI, feedback is not just about features — it’s about truth. Every correction, rejection, or acceptance from a user is a signal that can (and must) feed directly back into the model.

This creates a unique dynamic: every user interaction isn’t just usage, it’s training. If you capture and leverage it, you compound. If you don’t, you stagnate — and your competitors who build these loops will quickly surpass you.

Why feedback loops are different in AI

In AI, performance doesn’t plateau because of features — it plateaus because models stop improving relative to real-world use. You may think you’ve built something magical, but if your users are constantly overriding outputs and those corrections never flow back into your system, you’re leaving gold on the floor.

Consider two AI startups solving the same problem:

Startup A treats feedback as support tickets. Users complain, product managers prioritize, and engineers fix issues in bursts.

Startup B treats feedback as data. Every rejection, every edit, every approval is automatically captured, structured, and used to fine-tune outputs.

After six months, Startup A is still relying on manual iteration. Startup B has thousands of micro-improvements baked into the product, compounding daily. Guess who has the moat?

The compounding feedback → model → product cycle

The most successful AI companies design for a self-reinforcing loop:

User interacts with output.

Approves, edits, rejects, or escalates.

Feedback is captured.

Not just “thumbs up/thumbs down,” but granular corrections (what changed, why it changed).

Model improves.

Fine-tuning, prompt optimization, or rule updates informed by that feedback.

Product improves.

Outputs get better, trust builds, reliance deepens.

Loop restarts, stronger.

Better product → more usage → more feedback → even better product.

This isn’t a nice-to-have. It’s the operating system of an AI company. Without it, you’re just a thin wrapper on an API. With it, you’re building a defensible system that gets smarter every day your users engage.

Case example: AI sales coaching

One AI sales coaching platform built their moat not by having the flashiest demo, but by capturing corrections from thousands of sales reps. Every time a rep adjusted a suggested response, the system logged it. Over time, those corrections created a dataset of what “good” sales language looked like in specific industries.

Fast forward two years: competitors could copy the idea, but they couldn’t copy the millions of nuanced feedback datapoints that had shaped the model. That feedback loop became the company’s moat, and adoption deepened because reps noticed the AI “learning” from their corrections.

How to institutionalize feedback

Design UI for corrections. Don’t hide feedback behind obscure buttons. Make it effortless for users to accept, reject, or edit outputs.

Example: Approve/reject toggles, inline editing, or one-click escalation.

Capture structured data. Don’t just log that feedback happened. Log what changed. Was a number corrected? A clause rewritten? A tone adjusted? Structure creates trainable signals.

Close the loop visibly. Show users that their feedback makes the system better.

Example: “Thanks for correcting this. Future outputs in this scenario will adapt accordingly.”

Prioritize critical feedback. Not all feedback is equal. Prioritize corrections in high-risk or high-value jobs (compliance, contracts, finance) over low-stakes tweaks.

Automate improvement cycles. Build pipelines that continuously feed structured feedback back into prompts, fine-tuning, or guardrails. Manual cycles are too slow.
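As one possible shape for "capture structured data" and "prioritize critical feedback," the sketch below diffs the AI output against the user’s final text using Python’s standard `difflib` and stores what was removed and added. The `FeedbackRecord` fields, the word-level granularity, and the `high_risk` flag are illustrative assumptions. A real pipeline would also record who made the change, when, and in what context.

```python
import difflib
from dataclasses import dataclass


@dataclass
class FeedbackRecord:
    action: str      # 'accepted', 'edited', or 'rejected'
    removed: list    # words the user took out of the AI output
    added: list      # words the user put in
    high_risk: bool  # assumed flag, e.g. compliance, contracts, finance


def capture_feedback(ai_text: str, final_text: str, high_risk: bool = False) -> FeedbackRecord:
    ai_words, final_words = ai_text.split(), final_text.split()
    if not final_words:
        # The user discarded the output entirely.
        return FeedbackRecord("rejected", ai_words, [], high_risk)
    if ai_words == final_words:
        return FeedbackRecord("accepted", [], [], high_risk)
    removed, added = [], []
    matcher = difflib.SequenceMatcher(a=ai_words, b=final_words)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed.extend(ai_words[i1:i2])
        if tag in ("replace", "insert"):
            added.extend(final_words[j1:j2])
    return FeedbackRecord("edited", removed, added, high_risk)


records = [
    capture_feedback("Thanks for reaching out today.", "Thanks for reaching out."),
    capture_feedback("Payment is due within 30 days.", "Payment is due within 45 days.", high_risk=True),
]
# Review high-risk corrections first; sorted() is stable, so order is otherwise kept.
for rec in sorted(records, key=lambda r: not r.high_risk):
    print(rec)
```

Logging the changed words rather than a bare thumbs-down is what makes the signal usable later for prompt updates, fine-tuning, or guardrail rules.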

Trust grows when feedback matters

There’s another hidden benefit: when users see their corrections lead to visible improvements, trust grows. They stop thinking of your AI as a black box and start seeing it as a partner that learns from them. This transforms the relationship from “I don’t trust this tool” to “I’m training this tool for my needs.” That psychological shift is the foundation of durable adoption.

The risk of ignoring feedback

I’ve also seen the opposite. One AI company launched with a flashy demo, gained hype, but never invested in feedback loops. Users encountered errors, corrected them, but nothing changed. Over time, frustration mounted: “I keep teaching this thing, but it never learns.” Churn skyrocketed. Within a year, the company was dead.

Ignoring feedback in AI isn’t just a missed opportunity. It’s a slow death.

At this stage, you may feel pressure to chase more features, more users, more distribution. But ask yourself: “Am I capturing and compounding the most valuable asset I have, my users’ feedback?” If the answer is no, you’re building a demo, not a product.

The companies that survive this AI wave will be the ones that institutionalize feedback → model → product loops so deeply that every single interaction strengthens their moat. That’s not just how you find PMF. That’s how you hold onto it.

Conclusion: Building AI Products That Endure

Finding PMF in AI is not about chasing scale for its own sake or being the loudest demo on launch day. It’s about proving, over and over again, that your product creates undeniable value in the places where pain is sharpest and trust matters most.

The founders who succeed won’t be those who try to boil the ocean, but those who start narrow, design for edge cases, and build defensibility into their product from day one. They’ll be the ones who obsess over trust, who measure adoption depth instead of vanity metrics, and who treat distribution not as a growth lever but as part of the product itself.

The lesson is simple, but not easy: AI punishes shortcuts. It rewards discipline, clarity, and relentless focus on outcomes. If you treat PMF as a moving target, if you build trust as carefully as you build features, if you make feedback your moat and workflows your distribution, you will earn the right not just to launch, but to last.

The AI landscape is crowded, noisy, and unforgiving. But it is also wide open for those willing to approach it with precision. The difference between fading into the noise and building something enduring will not come from ambition or hype. It will come from the discipline of fit.

If you want to master building AI products that will last for decades to come, consider joining Miqdad’s 6-week AI PM Certification with $500 off. Book here.
