The Dawn of AI Agents: What Really Makes Them Work In Practice

What Most Teams Get Wrong With 2026’s Most In-Demand Skillset & Lessons Learned from AI Agent Implementations.

Welcome to NEW ECONOMIES, where we decode the technology trends reshaping industries and creating new markets. Whether you’re a founder building the future, an operator scaling innovation, or an investor spotting opportunities, we deliver the insights that matter before they become obvious. Subscribe to stay ahead of what’s next alongside 75,000+ others.

This week’s guest post:

Sara Davison and Tyler Fisk are agentic AI practitioners who work hands-on with the technology every day. Outside their work with clients, their focus is on making agentic AI accessible and practical, teaching people and teams how to move from ideas to working agentic workflows.

More than 1,000 people have learned to build AI Agents through their flagship program on Maven, where they guide learners step by step in building agentic workflows over four weeks. The course is immersive and tactical, drawing directly from the frameworks and approaches they use in real-world projects.

Their work blends teaching with practice, breaking down complex concepts into methods that students can apply immediately, no matter their starting point.

In this week’s edition, we go beyond the hype of AI agents to uncover what really makes them work in practice. Sara and Tyler share lessons from building real-world workflows, alongside fresh insights from McKinsey’s study of 50+ enterprise implementations.

You’ll learn why the most successful teams don’t start with code, but with understanding hidden layers of work; how evaluation frameworks become the true moat in AI adoption; and why starting small beats premature generalization.

If you’re a founder, operator, or investor navigating the AI shift, this playbook will help you see opportunities others are missing.

Let’s dive in 🚀

Lesson 1: Building AI Agents Isn’t Actually About First Building AI Agents

The Real Work Happens Before You Write a Single Prompt

McKinsey’s analysis of 50+ enterprise implementations revealed something most teams miss entirely: successful AI agents aren’t built on technical sophistication. They’re built on workflow understanding.

We don’t start with “let’s build an AI agent.” We start with “let’s understand how work actually gets done here.”

The Fundamental Disconnect

When we embed with teams, we discover the same pattern repeatedly: the documented process and the actual work are two completely different things.

Take a common scenario: a client with what appeared to be a straightforward workflow. Their official documentation showed a clean, linear process. But after shadowing their teams, we discovered:

The real decisions happened in 30-second informal conversations between departments

Critical assessments relied on pattern recognition developed over years, held in people’s minds and never written down

When the system flagged edge cases, experienced team members had a completely undocumented workaround involving multiple data sources and judgment calls

None of this was in their process documentation. All of it was essential for the AI Agents to actually work.

Why Most Teams Build the Wrong Thing

Most AI Agent implementations fail because teams mistake surface procedures for operational reality. They build agents based on documentation, happy paths, and isolated tasks instead of the interconnected workflow ecosystem where real work happens.

This creates agents that work in demos but break in real life. The technology isn’t the problem—the understanding of operational reality is.

The Four Layers of Work Intelligence That Define What You Should Build

Before you write a single system instruction, you need to map these four layers of how work actually gets done:

Layer 1: Surface Procedures - The official playbook or SOP (where most AI projects stop).

Layer 2: Operational Reality - The workarounds and “we actually do it this way” rules that reveal why experienced workers route certain cases differently based on context.

Layer 3: Contextual Intelligence - The intuition experts develop after years on the job, defining what your agent actually needs to be good at.

Layer 4: Cultural DNA - The values and norms that shape decision-making, determining how your agent should behave, not just what it should do.
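One way to keep the four layers honest during discovery is to record notes against each layer and check which ones are still empty. The sketch below is only an illustration: the `WorkflowMap` class, its field names, and the claims-triage notes are all hypothetical, not part of any framework named in this piece.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowMap:
    """Discovery notes for one workflow, grouped by the four layers."""
    name: str
    surface_procedures: list[str] = field(default_factory=list)       # Layer 1
    operational_reality: list[str] = field(default_factory=list)      # Layer 2
    contextual_intelligence: list[str] = field(default_factory=list)  # Layer 3
    cultural_dna: list[str] = field(default_factory=list)             # Layer 4

    def missing_layers(self) -> list[str]:
        """Return the names of layers that have no notes yet."""
        layers = {
            "surface_procedures": self.surface_procedures,
            "operational_reality": self.operational_reality,
            "contextual_intelligence": self.contextual_intelligence,
            "cultural_dna": self.cultural_dna,
        }
        return [label for label, notes in layers.items() if not notes]


# Hypothetical notes from a first week of shadowing.
claims = WorkflowMap(
    name="claims triage",
    surface_procedures=["SOP: route every claim through the intake form"],
    operational_reality=["Senior adjusters phone the broker first on flagged claims"],
)

gaps = claims.missing_layers()
print(gaps)  # the layers still to be mapped before any agent is designed
```

A map with gaps in Layers 3 and 4 is a signal to keep shadowing, not to start writing system instructions.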

The Pre-Build Discovery Process That Actually Works

Here’s the tactical approach that determines what kind of agent you should build and whether you should build one at all:

Embed During Crisis Points

Don’t observe during normal operations. Shadow users when systems are down, when they’re handling their most complex cases, when the documented process completely fails them. That’s when you see what actually matters.

Map the Invisible Network

Document who people actually ask when they’re stuck and what those people say in return. Track the informal communication patterns. In one engagement, we discovered that the internal decision-making process around client pitches was entirely undocumented. That insight completely changed what we built.

Capture the “Why” Behind Decisions

Don’t just document what people do; understand their decision trees. When an expert says “this doesn’t feel right,” dig into what patterns they’re recognizing. This becomes the intelligence your agent needs to replicate, not just the tasks it needs to complete.

What This Changes About What You Build

When you understand work at this level, you don’t just build better agents, you build fundamentally different systems.

Instead of task automation, you codify the secret sauce of how work is done. Instead of process replication, you build decision support that feels like it understands the business.

More importantly, you capture the competitive advantage that’s been locked in your top performers’ heads and scale it across the entire operation.

This discovery process isn’t just prep work.

It’s strategy work.

What you discover in these layers determines not just how to build your agent, but what business problems you’re actually solving.

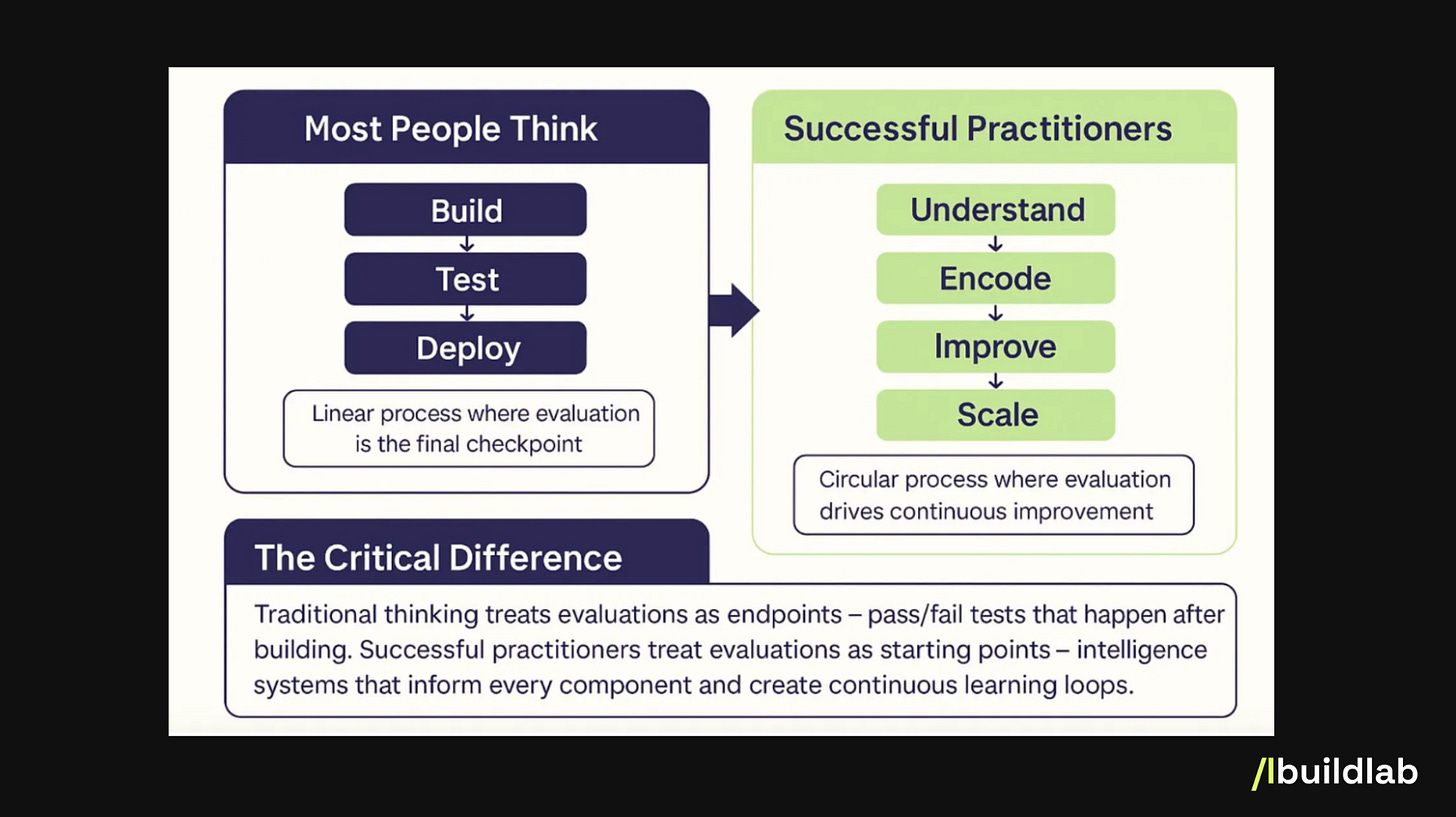

Lesson 2: Evaluation-First Beats Agent-First

Most Teams Build Backwards (And Some Don’t Even Know What They’re Missing)

Here’s the approach most teams take:

Build the agent → Test with a few examples → If it “looks good,” deploy it → Wonder why it breaks in production.

McKinsey’s research identified evaluation as important for building trust and preventing failures. But what we’d really like to call out is that evaluation isn’t just quality control, it’s how you capture the intelligence that makes your agents actually work.

What Are Evaluations, Actually?

Evaluations (or “evals”) are systematic ways to measure whether your AI system produces good outputs.

And yes, they are a form of quality control in AI implementations, but most people stop there and treat them like a final checkpoint: “Does this work well enough to ship?”

But here’s what successful practitioners understand:

Your evaluation work IS your agent strategy.

The Critical Insight Most Miss

When AI agents operate autonomously, you’re not just risking poor performance, you’re creating organizational liability with systems you can’t predict or control.

Traditional software fails predictably.

AI agents can fail creatively, in ways you never anticipated.

Customer service agents giving incorrect information.

Document processing systems misclassifying critical data.

Sales support tools making pricing errors that erode margins.

All operating at scale before anyone notices the pattern.

Why Most Teams Get This Wrong

Most teams approach evaluation as an afterthought:

Write some prompts

Test with a few examples

If it “looks good,” deploy it

Wonder why it breaks in production

But here’s what you should be doing:

Define what “good” actually looks like in your domain

Create systematic ways to measure that quality

Test against hundreds of scenarios, not just happy path examples

Use those insights to improve every part of your system
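To make those four steps concrete, here is a minimal sketch of an evaluation harness. Everything in it is an assumption made for illustration: `toy_agent` is a keyword-matching stand-in for a real agent call, the three checks are invented definitions of "good" for a support desk, and a real suite would hold hundreds of scenarios rather than four.

```python
from typing import Callable


def toy_agent(ticket: str) -> str:
    """Hypothetical stand-in for a real agent; swap in your own call."""
    if "refund" in ticket.lower():
        return "I'm sorry about that. I've escalated your refund request to billing."
    return "Thanks for reaching out. Here is what to try next."


# Step 1: define what "good" looks like as named checks.
def acknowledges_customer(ticket: str, reply: str) -> bool:
    return any(word in reply.lower() for word in ("sorry", "thanks"))


def escalates_billing_issues(ticket: str, reply: str) -> bool:
    is_billing = any(word in ticket.lower() for word in ("refund", "charged"))
    return (not is_billing) or "escalated" in reply.lower()


def makes_no_price_promises(ticket: str, reply: str) -> bool:
    return "$" not in reply


CHECKS: dict[str, Callable[[str, str], bool]] = {
    "acknowledges_customer": acknowledges_customer,
    "escalates_billing_issues": escalates_billing_issues,
    "makes_no_price_promises": makes_no_price_promises,
}

# Step 3: scenarios beyond the happy path.
SCENARIOS = [
    "I want a refund for my last order",
    "How do I reset my password?",
    "I was charged twice this month",  # billing issue without the word "refund"
    "Can you give me a discount?",
]


# Step 2: a systematic way to measure quality.
def run_evals(agent, scenarios, checks) -> dict[str, float]:
    """Return the pass rate of each check across all scenarios."""
    passed = {name: 0 for name in checks}
    for scenario in scenarios:
        reply = agent(scenario)
        for name, check in checks.items():
            if check(scenario, reply):
                passed[name] += 1
    return {name: count / len(scenarios) for name, count in passed.items()}


report = run_evals(toy_agent, SCENARIOS, CHECKS)
print(report)
```

In this toy run the billing check passes on only three of the four scenarios, because the agent misses a double-charge complaint that never uses the word "refund". That is step 4 in miniature: the failing scenario tells you what to fix in the agent.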

The gap between these approaches is why most AI implementations feel impressive in demos but fall flat in actual reality.

The Three Levels of Evaluation Maturity

Most teams operate at Level 1 without realizing that more sophisticated approaches create competitive advantage:

Level 1: Basic Quality Control (What everyone does)

Generic accuracy metrics and simple pass/fail testing. “Did the agent process documents with 80% accuracy?”

Level 2: Business Value Connection (What works)

Measuring how system performance aligns with real business outcomes. “Did the agent spot the patterns that matter for actual business decisions?”

Level 3: Expertise-Encoding At Scale (Your competitive advantage)

Capturing and scaling the instincts of top performers into structured evaluation systems. This is where evaluation becomes strategy.
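The gap between Level 1 and Level 2 is easiest to see with numbers. The sketch below scores the same five results two ways: plain accuracy, and the share of at-stake value the agent handled correctly. The labels, the dollar costs, and the `value_protected` metric itself are assumptions chosen for illustration, not a standard measure.

```python
# Each record: (expert_label, agent_label, cost_if_wrong_in_dollars).
# All values are made up for illustration.
RESULTS = [
    ("ok", "ok", 50),
    ("ok", "ok", 50),
    ("ok", "ok", 50),
    ("ok", "ok", 50),
    ("risky", "ok", 5000),  # the agent missed the one case that mattered
]


def accuracy(results) -> float:
    """Level 1: fraction of cases where the agent matched the expert."""
    correct = sum(1 for expected, actual, _ in results if expected == actual)
    return correct / len(results)


def value_protected(results) -> float:
    """Level 2: share of total at-stake cost the agent handled correctly."""
    total = sum(cost for _, _, cost in results)
    protected = sum(cost for expected, actual, cost in results if expected == actual)
    return protected / total


level_1 = accuracy(RESULTS)
level_2 = value_protected(RESULTS)
print(f"accuracy: {level_1:.0%}, value protected: {level_2:.1%}")
```

The agent matches the expert on four of five cases, so it clears an 80% accuracy bar, yet it protects under 4% of the money at stake. Weighting by business impact is one simple way to move from the first question to the second.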

Why Level 3 Changes Everything

Say you work with a team where the top performer can instantly spot problematic cases. Understanding why doesn’t just create a test case. That insight becomes the intelligence that informs your AI Agent design:

System instructions encoded with expert decision patterns

Context selection highlighting the data points experts actually use

Training examples demonstrating expert-level pattern recognition

This creates circular intelligence:

Better evaluations reveal better approaches, which create better outputs, which teach you better evaluation criteria.
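One way to picture that loop is to keep each expert pattern in a single record and derive both the system instructions and the evaluation cases from it, so an insight captured once improves both sides. This is a rough sketch under our own assumptions: the `ExpertPattern` class, the helper functions, and the invoice-review patterns are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass
class ExpertPattern:
    name: str
    instruction: str     # the rule, phrased the way the expert explains it
    example_input: str   # a case where the pattern is relevant
    expected_flag: bool  # whether the expert would flag that case


# Hypothetical patterns gathered from a top-performing invoice reviewer.
PATTERNS = [
    ExpertPattern(
        name="round_total_new_vendor",
        instruction="Flag round-number totals from vendors added in the last 30 days.",
        example_input="Vendor added 5 days ago, invoice total 10000.00",
        expected_flag=True,
    ),
    ExpertPattern(
        name="recurring_known_vendor",
        instruction="Do not flag recurring invoices from long-standing vendors.",
        example_input="Vendor added 4 years ago, monthly invoice total 1249.37",
        expected_flag=False,
    ),
]


def build_system_instructions(patterns: list[ExpertPattern]) -> str:
    """Turn expert patterns into the rules section of a system prompt."""
    lines = ["Apply these reviewer rules:"]
    lines += [f"- {p.instruction}" for p in patterns]
    return "\n".join(lines)


def build_eval_cases(patterns: list[ExpertPattern]) -> list[tuple[str, bool]]:
    """Turn the same patterns into (input, expected_flag) evaluation cases."""
    return [(p.example_input, p.expected_flag) for p in patterns]


instructions = build_system_instructions(PATTERNS)
eval_cases = build_eval_cases(PATTERNS)
print(instructions)
print(eval_cases)
```

When a failed evaluation uncovers a new pattern, adding one record updates both the instructions and the test suite on the next run.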

Evals Are Your Moat …

Anyone can access the same AI models, so if the underlying technology isn’t the moat, what is?

The commoditization of AI capability means competitive advantage shifts to evaluation expertise.

Your evaluation framework captures and scales:

What separates your best performers from average ones

The difference between best practices and disasters

How to consistently create exceptional outcomes instead of mediocre results

This isn’t just measurement; it’s systematic capture of organizational intelligence that becomes impossible for competitors to replicate. Through this process, you’re capturing and scaling your secret sauce.

What This Means Tactically

→ If you’re currently testing AI outputs through ‘vibe checks’ and hoping they work, you need systematic evaluation frameworks that capture domain expertise.

→ If you’re measuring basic accuracy metrics, you need to identify what exceptional performance actually looks like in your specific context.

→ If you’re treating evaluations as final quality checks, you need circular systems where evaluations drive continuous improvement.

Lesson 3: Premature Generalization Kills Good Agents

The “Build Once, Use Everywhere” Trap

McKinsey’s research highlighted the importance of reusable components for driving efficiency at scale. We say yes, AND timing is important. Here’s the timing trap most teams fall into: they try to build “reusable” from day one and end up with generic tools that work poorly everywhere.

We see this pattern constantly: teams get excited about AI’s potential and immediately think “how can we make this work across all our departments?” They build broad solutions instead of exceptional ones.

Why Starting Generic Guarantees Mediocrity

Here’s what actually works:

Build for one person’s or one team’s specific workflow first. Make their day 10x better. Then extract the patterns that can apply elsewhere.

The reason is simple: you can’t generalize what you don’t deeply understand. When you try to build for “everyone” from the start, you’re building for no one in particular.

The Intelligence Capture Problem

Every high-performing workflow contains specific intelligence: the edge case handling, the contextual decisions, the “this doesn’t feel right” pattern recognition that experts develop over years.

When you build generically, you miss this intelligence entirely. You end up with agents that handle the obvious stuff but fail on anything requiring judgment.

But when you build for one specific expert first, you capture their decision-making patterns. That intelligence becomes the foundation for everything else you build.

This approach doesn’t just create better AI agents. It creates competitive advantages that are hard to replicate. Your competitors can copy your technology, but they can’t copy the specific workflow intelligence you’ve captured from your best performers.

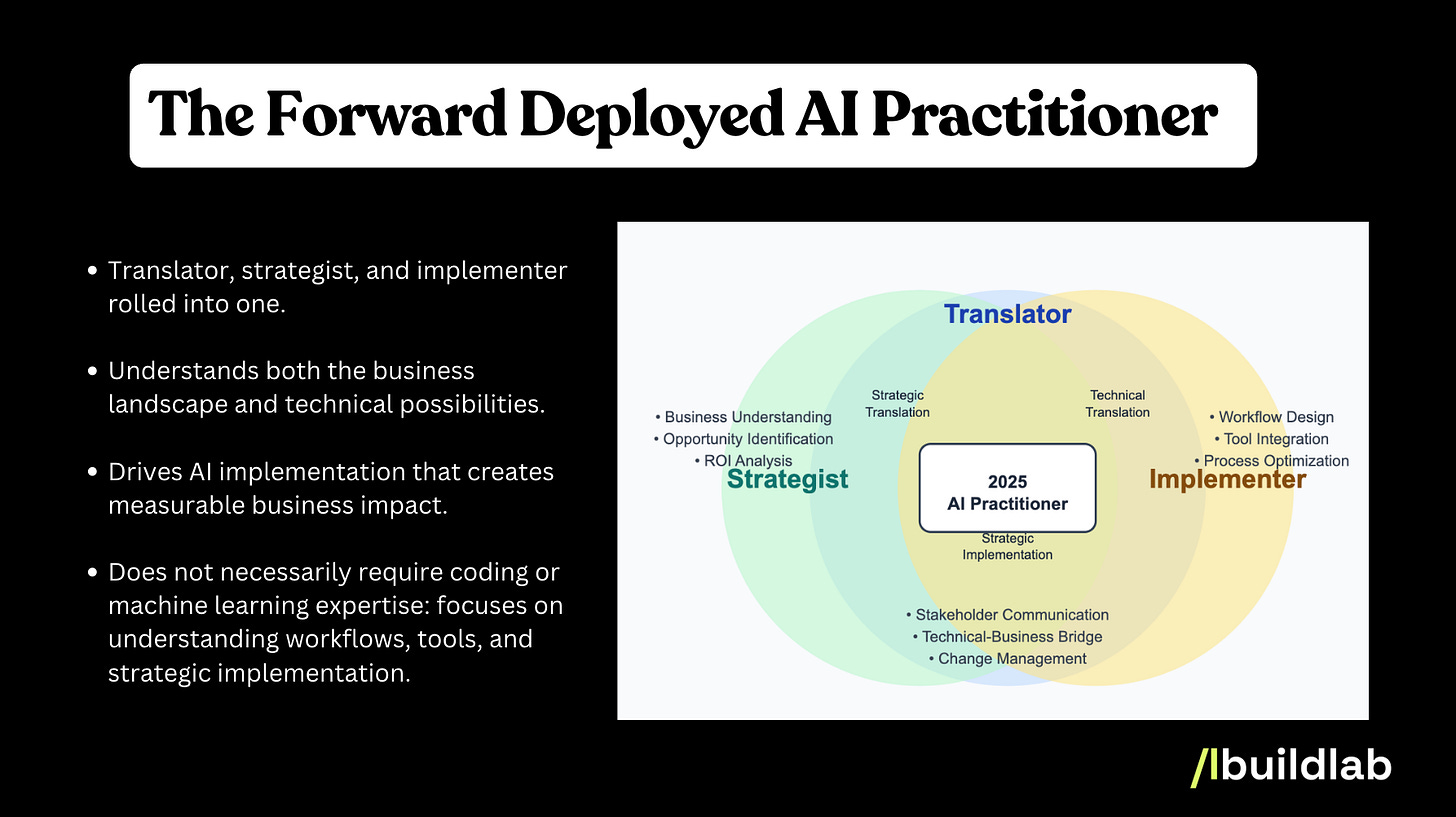

The 2025-2026 Skillset Opportunity

YC’s job board shows 100+ startups hiring for “Forward-Deployed Engineer” roles, up from zero just three years ago. But what they’re really looking for is what we’ve been calling the New AI Practitioner (more recently, the Forward-Deployed AI Practitioner) since long before it had an official name.

Early in building AI agents, we saw the same pattern again and again: slick demos that collapsed in real-world use. Consultants sat in conference rooms gathering requirements, but the real workflows — full of edge cases and judgment calls — happened on the floor.

So we embedded differently.

We shadowed the person handling the weirdest cases.

We designed for one critical workflow until it was exceptional.

Only later did we realize Palantir had formalized this as “Forward-Deployed Engineering.” But for us, it was born of necessity: you can’t build exceptional agentic workflows without deeply understanding the work itself.

The Forward-Deployed AI Practitioner

Palantir’s forward-deployed engineers didn’t sell demos — they embedded with teams until they understood the job from the inside. Then they returned with systems that reflected reality, not templates. That’s what triggered the “take my money” moment.

What They Actually Do

Secret Sauce Extraction

They uncover what makes domain experts exceptional: the tacit knowledge manuals never capture.

Surfacing Decision Logic

Experts often can’t explain why they know something is off. Practitioners break down those instincts into patterns and decision trees that can be scaled.

Reality-Based System Design

Instead of designing from documents, they build from embedded observation, mapping workflows as they actually unfold (failures, exceptions, and all).

Why This Triggers the “Take My Money” Effect

When systems are designed from this level of understanding, they don’t feel generic. They feel like they were built by someone who gets the work. The result isn’t just automation — it’s the ability to scale best practice and capture competitive advantage.

Why This Role Matters Now

This isn’t just another “AI job” trend. It sits at the intersection of three accelerating forces:

AI Commoditization: Models are widely available — expertise in implementation is the differentiator.

The Implementation Gap: Most organizations are far behind in adoption.

Workflow Complexity: Modern work is layered with nuance that only properly designed AI can handle.

What It Looks Like in Practice:

Forward-Deployed AI Practitioners don’t start with “What can AI do?” They start with “What does excellence look like in this domain?” — and then work backward to design systems that scale it.

That’s the real market signal. And it’s only getting louder.

Sara & Tyler’s flagship AI Agents Program is designed to take founders, operators and teams from wherever they are to building agentic workflows in 4 weeks, with a methodological roadmap validated with 1,000+ learners. NEW ECONOMIES readers can enjoy an exclusive 15% discount code on the program’s 2025 pricing ($895) ahead of the program price increase to $995 in 2026.

This pricing includes a complimentary repeat of a future cohort to further embed learning and application.

Awesome piece. Thanks team, so many takeaways, but for me this hit hard "you can’t generalize what you don’t deeply understand" - it's why a slow and steady approach is the way to critical thinking. 🙏

Most firms claiming to be “AI-first” are really just “LLM-assistant-first”, and that’s a dangerous illusion.

This article nails it, but the uncomfortable truth is that practitioners like me spend more time re-educating senior executives than actually building. I took courses with Sara and Tyler (the BEST in the market) and I use their frameworks daily. However, in global organizations, progress is limited to using rigid LLMs and shallow agentic capabilities. Leaders mistake pilots and demos for transformation, while the real moat, EVALS and workflow intelligence, barely makes it past PowerPoint.

If we want to break the cycle, leadership must stop treating AI like a compliance checkbox and start treating it as a cultural reset, otherwise competitors will be encoding expertise at scale while they’re still running “safe” sandbox experiments.

The hopeful part? People like Sara and Tyler are playing the role that great thought leaders throughout history have always played, sparking cultural shifts by equipping a new generation of practitioners with knowledge and frameworks that ripple outward. That’s how we move from AI hype to lasting transformation.